Good afternoon. It has been too long since I last posted. Today I found a solution to a problem I have seen several times and I wanted to share it.

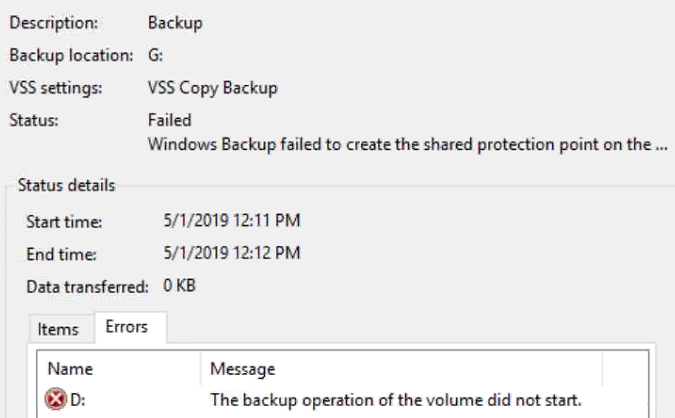

I had a customer that was experiencing backup issues with a new installation of Windows. When trying to back up the server in Windows Server Backup, the backup would always fail with the error “Windows Backup failed to create the shared protection point on the …”. An important point to note is that the error always occurred during the VSS snapshot phase of the backup.

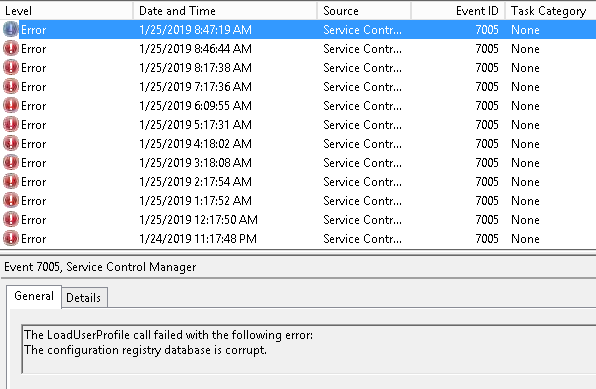

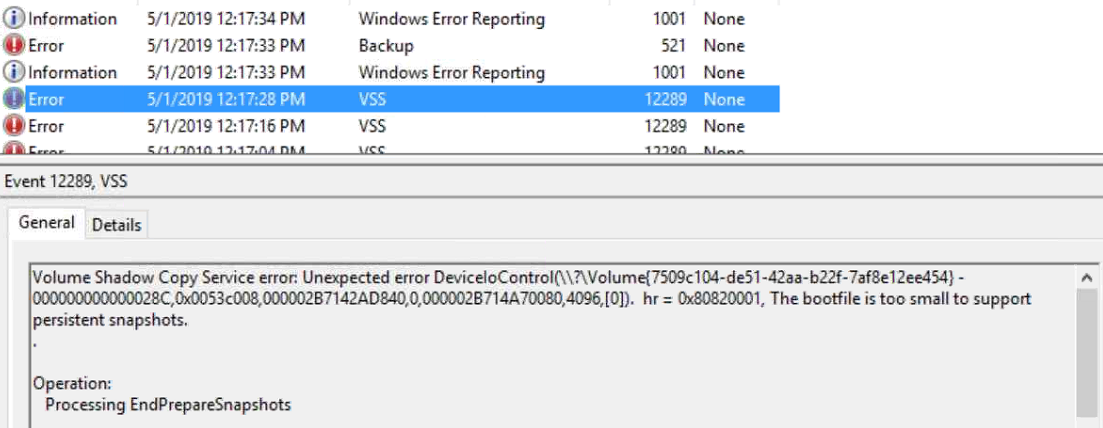

Below is the resulting Application event log with the key event highlighted.

At this point it is probably helpful to get a high-level overview of how Windows Server Backup and VSS work. When Windows Server Backup starts a backup, one of the first steps is to call VSS to take a snapshot. When the backup destination is a local disk, the request covers both the backup destination and the backup source. This is so that Windows Server Backup can compare the blocks in both to perform an incremental backup. It also means that a failure to snap either the source or the destination can cause the backup to fail.

I have seen this issue a handful of times, and the consensus was that the backup drive was causing the problem. While this can be the case, today I learned how to use the event log to pinpoint which volume is actually causing the error. The key is the volume GUID (Globally Unique Identifier) specified in the description of the event. This is the volume that cannot be snapped by VSS and is causing the backup to fail.

So how do you take the GUID and get the drive letter? This is the easiest part. Simply open an admin cmd window and run the command “mountvol”. At the end of the output, every volume is listed by GUID along with its drive letter or mount point. In our case it was the D:\ drive, which contained user data. We ran a test backup excluding the D:\ drive and it completed with no errors.
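If you have several volumes, matching the GUID by eye gets tedious. Here is a minimal Python sketch of the lookup, assuming the volume list at the end of the mountvol output uses the usual layout of a `\\?\Volume{GUID}\` line followed by indented mount points. The function names and the GUIDs in `SAMPLE` are made up for illustration; they are not from the customer's server.

```python
import re

# Matches a volume name line such as \\?\Volume{GUID}\
VOLUME_RE = re.compile(r"\\\\\?\\Volume\{[0-9A-Fa-f-]{36}\}\\")

# Hypothetical excerpt shaped like the tail of mountvol output.
SAMPLE = r"""
    \\?\Volume{11111111-2222-3333-4444-555555555555}\
        C:\

    \\?\Volume{aaaaaaaa-bbbb-cccc-dddd-eeeeeeeeeeee}\
        D:\

    \\?\Volume{99999999-8888-7777-6666-555555555555}\
        *** NO MOUNT POINTS ***
"""


def parse_mountvol(output: str) -> dict[str, list[str]]:
    """Map each volume GUID path in the output to its mount points."""
    volumes: dict[str, list[str]] = {}
    current = None
    for raw in output.splitlines():
        line = raw.strip()
        if VOLUME_RE.fullmatch(line):
            # Start of a new volume entry.
            current = line
            volumes[current] = []
        elif not line:
            # A blank line ends the current volume entry.
            current = None
        elif current is not None and not line.startswith("***"):
            # Any other indented line is a drive letter or mount path.
            volumes[current].append(line)
    return volumes


def mount_points_for(guid: str, output: str) -> list[str]:
    """Return the mount points for a GUID copied from the event description."""
    wanted = guid.strip("{}").lower()
    for name, mounts in parse_mountvol(output).items():
        if wanted in name.lower():
            return mounts
    return []


print(mount_points_for("{AAAAAAAA-BBBB-CCCC-DDDD-EEEEEEEEEEEE}", SAMPLE))
```

On a real server you would feed it the live output instead of `SAMPLE`, for example by capturing `subprocess.run(["mountvol"], capture_output=True, text=True).stdout`. The comparison ignores case and braces because the event log and mountvol may not format the GUID identically.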

How do I fix the volume so it will back up? Obviously we do not want to exclude a volume from the backup permanently. There are two methods to repair this issue. First, chkdsk /f can be run to attempt to repair the volume. If that fails, though, you are likely looking at a bit of work to recreate the volume. Here is the process:

- Back up the data with robocopy or another file-level backup utility. For robocopy, an example command is: robocopy <source> <destination> /MIR /XJ /W:5 /R:3 /LOG+:c:\robolog.txt

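- Run diskpart and “clean” the disk. To do this, run diskpart from an admin cmd window, select the problem disk, then run the clean command. Keep in mind that clean removes every partition on the selected disk, so confirm the backup from the previous step is complete first.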

- Recreate the volume

- Restore the data with robocopy or whichever file-level backup utility you used previously.
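Because the clean step is destructive, it is worth confirming that the robocopy backup actually succeeded before moving on. Below is a rough Python sketch of how the copy step could be wrapped, using the same switches as the example command above. The helper names and the paths in the usage line are hypothetical. The success check relies on robocopy's exit code being a bit mask in which values of 8 or higher indicate at least one failure.

```python
import subprocess


def robocopy_command(source, destination, log_path=r"c:\robolog.txt"):
    """Build the robocopy argument list with the switches from the post."""
    return [
        "robocopy", source, destination,
        "/MIR",                # mirror the source tree
        "/XJ",                 # skip junction points
        "/W:5",                # wait 5 seconds between retries
        "/R:3",                # retry a failed copy 3 times
        f"/LOG+:{log_path}",   # append to the log file
    ]


def robocopy_succeeded(exit_code: int) -> bool:
    """Exit codes below 8 mean no copy failures were reported."""
    return 0 <= exit_code < 8


def run_copy(source, destination):
    """Run robocopy (Windows only) and report whether it finished cleanly."""
    result = subprocess.run(robocopy_command(source, destination))
    return robocopy_succeeded(result.returncode)


# Hypothetical paths, shown only to illustrate the generated command line.
print(" ".join(robocopy_command(r"D:\data", r"E:\staging")))
```

Note that /MIR also deletes files in the destination that do not exist in the source, so point it at an empty staging folder for the backup, and swap source and destination for the restore.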

I hope you have found this post informative. If you have another way to solve this problem I would love to hear about it in the comments.